Move over Google, AI is the new doctor in town!

With more and more people turning to AI for health-related queries, studies show AI-assisted diagnoses may help, but only when paired with trained human judgment.

Author

- admin / 3 months

Who would have thought that for every minor inconvenience or health concern, be it a skin rash, a persistent cough, or a random ache, we’d be turning to ChatGPT for answers? In an era where artificial intelligence is at our fingertips, AI chatbots are fast becoming the new front line for health queries.

Patients no longer rely only on doctors or symptom-checker websites; they also consult large language models (LLMs) such as ChatGPT and Gemini for advice on symptoms, diagnoses, and even treatment options. This shift has sparked global debate, raising questions about accuracy, access, ethics, and the evolving relationship between patients and medical professionals.

What are the benefits of using AI for health advice?

A 2024 Australian study published in the Medical Journal of Australia found that 9.9% of adults had used ChatGPT to obtain health-related information within the first six months of its widespread availability. Usage was higher among people with low health literacy, those living in capital cities, and those for whom English was not a first language.

For some, AI offers reassurance, especially when used with caution. Simran Mudgal, a Delhi-based journalist, said she turned to ChatGPT out of curiosity after experiencing post-recovery pain from tuberculosis.

“Doctors had already said the pain wasn’t serious, but I wanted to see what ChatGPT would say. It gave similar explanations, nothing alarming, so I felt reassured,” she said. “Had it suggested something drastic, I would’ve consulted a doctor immediately. But since the information felt harmless, I took it with a pinch of salt.”

Studies, however, have shown mixed results on whether AI is an effective diagnostic tool. There is no doubt that AI chatbots offer a number of potential benefits in healthcare settings. They can generate fast answers, reduce the burden on overworked medical staff, and serve as an educational resource for patients. A 2023 study in Scientific Reports showed that such AI-powered tools could simplify complex health literature into more readable formats, particularly for people with limited literacy or medical knowledge.

However, a separate study published in JAMA Network Open evaluated physicians, including those using GPT‑4 alongside traditional diagnostic tools, and found that “the use of an LLM did not significantly enhance diagnostic reasoning performance compared with the availability of only conventional resources.”

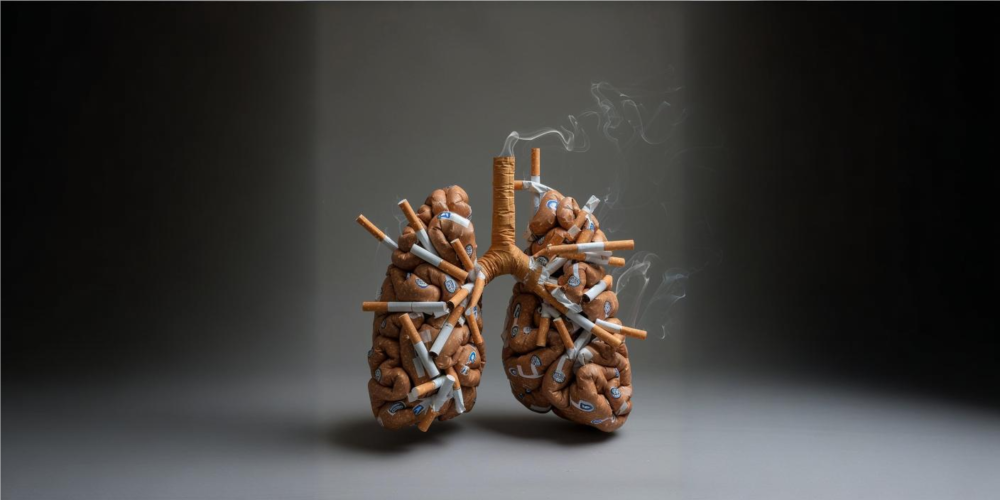

What are the risks of relying on AI for health advice?

Despite their promise, AI chatbots also come with considerable risks. According to a study published in PLOS ONE, ChatGPT is not accurate enough to be used as a standalone diagnostic tool. The researchers evaluated ChatGPT 3.5 using 150 real-world clinical case vignettes sourced from Medscape. While the model provided the correct diagnosis in its top answer for 49% of cases, its overall diagnostic accuracy across multiple-choice options was 74%. The study further cautions that ChatGPT often struggles with interpreting laboratory values and imaging results, and it may overlook key clinical details.

“ChatGPT in its current form is not accurate as a diagnostic tool. ChatGPT does not necessarily give factual correctness, despite the vast amount of information it was trained on. Based on our qualitative analysis, ChatGPT struggles with the interpretation of laboratory values, imaging results, and may overlook key information relevant to the diagnosis. However, it still offers utility as an educational tool,” the authors warned in the July 2024 paper.

Dr Gita Prakash, an internal medicine specialist based in Delhi, acknowledges the growing presence of AI tools like ChatGPT in patient behaviour but stresses the irreplaceable value of human interaction in medicine.

“Earlier, patients would often Google their symptoms before visiting. Now, they have options like ChatGPT or other chatbots,” she said. “There’s nothing inherently wrong with AI in healthcare, but it works with limited input. It doesn’t know your full medical history, family background, or pre-existing conditions like diabetes or hypertension.”

Dr Prakash explains that medical diagnosis is often shaped by subtle cues, something AI can’t fully grasp.

“When we examine a patient, we ask questions dynamically, based on what we see, what we sense, and even what we feel emotionally,” she said. “A chatbot can’t assess where the pain is, how severe it feels, or whether it’s linked to stress or anxiety. That kind of nuance comes from physical presence.”

Other concerns arise from how people interact with AI-generated health advice. A recent paper in the Journal of Medical Artificial Intelligence revealed that while AI tools like ChatGPT may enhance efficiency and assist in clinical workflows, they also pose critical risks. These include overreliance on AI without adequate human oversight, the use of biased or incomplete datasets, and ethical uncertainties around data privacy, consent, and accountability.

Dr Prakash also warned that incomplete information fed into AI systems could result in false or misleading conclusions. According to her, while such tools may not be inherently dangerous, relying on them without medical supervision can delay or distort diagnosis. In her view, in-person consultations provide an irreplaceable sense of confidence, empathy, and trust, elements that AI cannot replicate.

Moreover, the use of AI in clinical settings is creating tension in doctor-patient relationships. A widely reported case from Australia involved a patient filing a formal complaint after their physician openly used ChatGPT during a consultation. The incident raised questions about professionalism and transparency in how AI tools are integrated into practice.

Where do we draw the line with AI in healthcare?

Experts agree that while AI can support clinical decision-making, it must be used under human supervision. A 2023 report by the National Center for Biotechnology Information emphasises the need for clear regulatory frameworks, transparency, and clinician training when implementing AI in healthcare settings.

Similarly, a 2025 study published in AI in Health and Medicine stressed that these tools need rigorous evaluation for safety and efficacy before being widely deployed in patient-facing applications.

The emergence of AI chatbots in medicine presents a compelling mix of opportunity and caution. Artificial intelligence could help fill gaps in the healthcare system and improve outcomes for patients if used thoughtfully as a tool, not a replacement. But without guardrails, training, and oversight, it risks becoming just another source of misinformation in an already crowded digital landscape.
